Geocircles

It’s time to write a post about Geocircles. According to whois, I registered the domain over a year ago, in July 2022. The first commit was made even earlier, in February of that year.

History

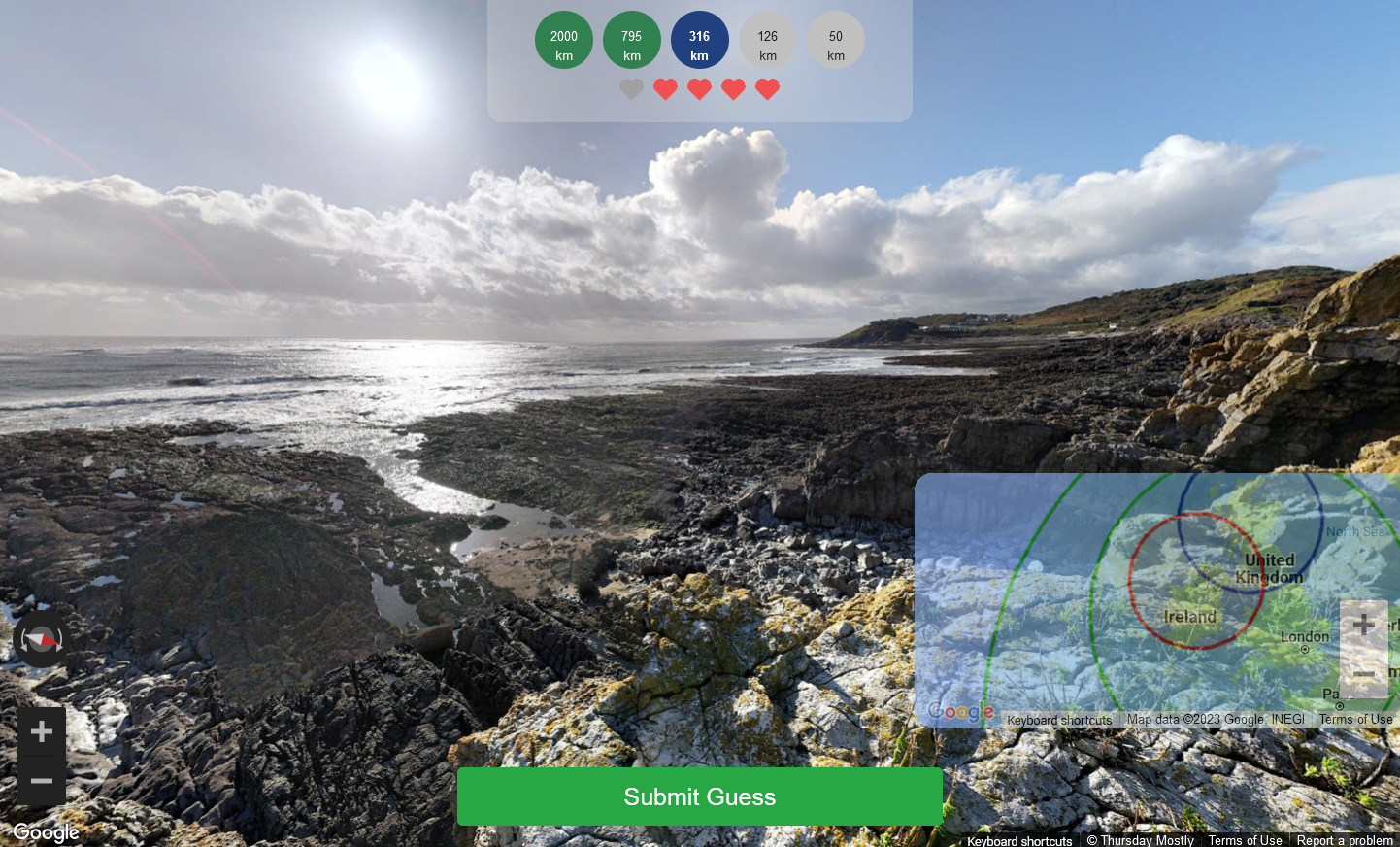

If you haven’t seen it before, Geocircles is a game where you try to place a Street View location on the map. It was originally born as a two-player, turn-based game. One player would pick a location; the other would guess. Then, they’d switch.

I’m not a frontend guy, so the UI was pretty clunky at first.

I originally stood up a proof-of-concept for this on my VPS, written in Python with the Flask framework. It couldn’t really support more than one game at a time. While I was working on scaling up the infrastructure, I released the daily-challenge version that is currently playable.

Over the course of a year, I collected 365 locations for the daily challenge. It recently ran out and started to repeat.

The UI has improved a little bit since then. Still not perfect, though.

So what happened to the two-player version? Well, I did rewrite the whole backend in Go. I designed a scalable architecture and deployed the thing in AWS. And, well, nobody played it. It only cost me about $10 a month to run, but when nobody actually played a game after two months I shut it down. That happened about a year ago now.

The daily challenge, at least, has a few loyal participants.

This post isn’t for me to complain about the lack of interest, though. It’s to look at the architecture.

Overview

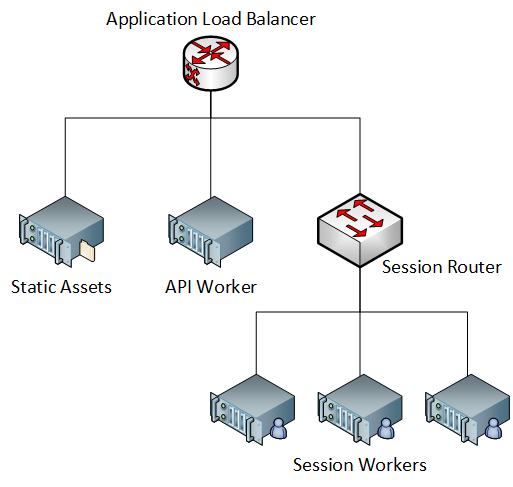

The backend of Geocircles was split into two parts: an API service and a session service. The API service handled creation of games, and the session service hosted the games themselves.

The API service was entirely stateless. Instead of storing anything in a database, it just signed tokens that enabled players to connect to their sessions. This is basically all that it was responsible for.

The session service needed state, but this state was only needed for the duration of a particular game and thus could be stored in memory.
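To make "state stored in memory" concrete, here is a minimal sketch of a per-process session registry. It is not the actual Geocircles code: the `Store` type, its methods, and the idea of tracking players by ID are assumptions made for illustration.

```go
package main

import (
	"fmt"
	"sync"
)

// session holds per-game state that only needs to live as long as the game.
// The fields are hypothetical; the post does not list them.
type session struct {
	players map[string]bool
}

// Store is an in-memory registry of active sessions, safe for concurrent use.
type Store struct {
	mu       sync.Mutex
	sessions map[string]*session
}

func NewStore() *Store {
	return &Store{sessions: make(map[string]*session)}
}

// Join adds a player to a session, creating it on first use, and returns
// the number of players now present.
func (s *Store) Join(gameID, userID string) int {
	s.mu.Lock()
	defer s.mu.Unlock()
	sess, ok := s.sessions[gameID]
	if !ok {
		sess = &session{players: make(map[string]bool)}
		s.sessions[gameID] = sess
	}
	sess.players[userID] = true
	return len(sess.players)
}

// End discards a finished game's state.
func (s *Store) End(gameID string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	delete(s.sessions, gameID)
}

// Active reports how many sessions are currently held in memory.
func (s *Store) Active() int {
	s.mu.Lock()
	defer s.mu.Unlock()
	return len(s.sessions)
}

func main() {
	store := NewStore()
	store.Join("game-1", "host")
	fmt.Println(store.Join("game-1", "guest"), store.Active())
	store.End("game-1")
	fmt.Println(store.Active())
}
```

Because nothing outlives the process, a worker restart loses its games. That trade-off only works if both players always reach the same worker.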

Both services were deployed as fleets of ECS containers. In front of them sat a router service and a load balancer. Another service served the static frontend assets. Nothing too interesting to talk about there.

Events

Nearly every interaction with the frontend resulted in some state being transmitted to the game’s assigned session worker. These events were then relayed to the other player in the session.

For example, if one player moved their panorama view, it generated a pano_update event with the new pitch, heading, and zoom level. The other player would then receive it, so they could watch their opponent explore. Submitting a challenge or a guess also generated an event. The backend added a flag to each guess, marking it as correct or incorrect.
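A minimal sketch of what a pano_update event could look like on the wire. The JSON encoding, the envelope shape, and the field names are assumptions; only the event name and its three values (pitch, heading, zoom) come from the description above.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Event is a hypothetical envelope: a type tag plus a type-specific payload.
type Event struct {
	Type    string          `json:"type"`
	Payload json.RawMessage `json:"payload"`
}

// PanoUpdate carries the view parameters relayed to the other player.
type PanoUpdate struct {
	Pitch   float64 `json:"pitch"`
	Heading float64 `json:"heading"`
	Zoom    float64 `json:"zoom"`
}

// encodePanoUpdate wraps a PanoUpdate in an Event envelope.
func encodePanoUpdate(p PanoUpdate) ([]byte, error) {
	payload, err := json.Marshal(p)
	if err != nil {
		return nil, err
	}
	return json.Marshal(Event{Type: "pano_update", Payload: payload})
}

// decodePanoUpdate checks the envelope type before decoding the payload.
func decodePanoUpdate(data []byte) (PanoUpdate, error) {
	var ev Event
	var p PanoUpdate
	if err := json.Unmarshal(data, &ev); err != nil {
		return p, err
	}
	if ev.Type != "pano_update" {
		return p, fmt.Errorf("unexpected event type %q", ev.Type)
	}
	err := json.Unmarshal(ev.Payload, &p)
	return p, err
}

func main() {
	data, err := encodePanoUpdate(PanoUpdate{Pitch: -5, Heading: 120.5, Zoom: 1})
	if err != nil {
		panic(err)
	}
	fmt.Println(string(data))
}
```

Keeping the payload as `json.RawMessage` lets a relay forward events it does not need to interpret, and decode only the ones it must inspect, such as guesses.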

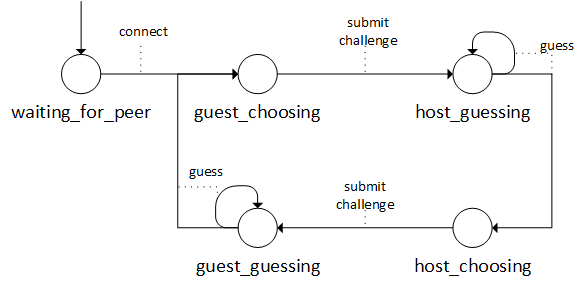

Based on the events it received, the session would transition between four states: player one choosing a challenge, player two solving the challenge, player two choosing, then player one solving. This would then repeat.

Before any of that, there is an initial state in which one player just waits for the other to connect. The state names here hint that the player who created the session was internally designated as the “host,” and the other as the “guest.”
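The cycle can be sketched as a small transition function. The state and event names below are made up for illustration (the real ones appear only in the diagram), and advancing the turn only on a correct guess is an assumption.

```go
package main

import "fmt"

// State is the phase a session is in. Names are hypothetical.
type State int

const (
	WaitingForGuest State = iota // initial: host waits for the guest to connect
	HostChoosing                 // host picks a challenge location
	GuestSolving                 // guest tries to place it on the map
	GuestChoosing                // roles swap: guest picks
	HostSolving                  // host guesses
)

var stateNames = [...]string{
	"waiting_for_guest", "host_choosing", "guest_solving", "guest_choosing", "host_solving",
}

func (s State) String() string { return stateNames[s] }

// EventKind is the subset of events that can move the session forward.
type EventKind int

const (
	GuestJoined EventKind = iota
	ChallengeSubmitted
	GuessCorrect // assumption: only a correct guess ends the solving phase
)

// Next returns the state after applying an event. Events that are not
// valid in the current state leave it unchanged.
func Next(s State, e EventKind) State {
	switch {
	case s == WaitingForGuest && e == GuestJoined:
		return HostChoosing
	case s == HostChoosing && e == ChallengeSubmitted:
		return GuestSolving
	case s == GuestSolving && e == GuessCorrect:
		return GuestChoosing
	case s == GuestChoosing && e == ChallengeSubmitted:
		return HostSolving
	case s == HostSolving && e == GuessCorrect:
		return HostChoosing
	}
	return s
}

func main() {
	s := WaitingForGuest
	events := []EventKind{GuestJoined, ChallengeSubmitted, GuessCorrect, ChallengeSubmitted, GuessCorrect}
	for _, e := range events {
		s = Next(s, e)
		fmt.Println(s)
	}
}
```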

Routing

WebSockets handled this event transmission and state synchronization, in order to stay real-time. This presented a challenge. I didn’t want the session workers to have to synchronize with each other, so both players in any particular session needed to be routed to the same session worker.

To achieve this without storing any shared state at all, I implemented a custom router service. It frequently polled ECS for an up-to-date set of session workers. This wasn’t perfect, but worked well enough. Then, requests were routed using consistent hashing on the session ID. Once I figured that out, the rest was easy. Go provides some pretty nice utilities for implementing a reverse proxy.
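For readers unfamiliar with consistent hashing, here is a minimal sketch of a hash ring with virtual nodes. It is one common way to do this, not necessarily the one the router used. The FNV hash, the replica count, and the worker addresses are all assumptions.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"sort"
	"strconv"
)

// Ring maps keys to workers by placing several points per worker on a
// 32-bit circle and picking the first point at or after the key's hash.
type Ring struct {
	points []uint32
	owner  map[uint32]string
}

func hashKey(s string) uint32 {
	h := fnv.New32a()
	h.Write([]byte(s))
	return h.Sum32()
}

// NewRing builds a ring from the current set of workers. Workers are
// sorted first so the result does not depend on discovery order.
func NewRing(workers []string, replicas int) *Ring {
	sorted := append([]string(nil), workers...)
	sort.Strings(sorted)
	r := &Ring{owner: make(map[uint32]string)}
	for _, w := range sorted {
		for i := 0; i < replicas; i++ {
			p := hashKey(w + "#" + strconv.Itoa(i))
			if _, taken := r.owner[p]; taken {
				continue // rare hash collision: keep the first owner
			}
			r.owner[p] = w
			r.points = append(r.points, p)
		}
	}
	sort.Slice(r.points, func(i, j int) bool { return r.points[i] < r.points[j] })
	return r
}

// Lookup returns the worker responsible for a session ID.
func (r *Ring) Lookup(sessionID string) (string, bool) {
	if len(r.points) == 0 {
		return "", false
	}
	h := hashKey(sessionID)
	i := sort.Search(len(r.points), func(i int) bool { return r.points[i] >= h })
	if i == len(r.points) {
		i = 0 // wrap around the circle
	}
	return r.owner[r.points[i]], true
}

func main() {
	ring := NewRing([]string{"10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"}, 64)
	worker, _ := ring.Lookup("session-abc")
	fmt.Println("session-abc ->", worker)
}
```

The useful property is that when a worker joins or leaves, only the sessions that mapped to that worker's points move. Every router instance that sees the same worker set computes the same answer, with no coordination.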

However, it meant I had to structure URLs such that the session ID was accessible to the router.
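A rough sketch of how a router might pull the ID out of the path and hand the request to a reverse proxy. It uses the standard library's `net/http/httputil`. The `pick` callback stands in for the consistent-hash lookup, and treating the game ID segment of `/session/<game-id>/<user-id>` as the session ID is an assumption.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
	"strings"
)

// sessionIDFromPath extracts the game ID from a path shaped like
// /session/<game-id>/<user-id>.
func sessionIDFromPath(path string) (string, bool) {
	parts := strings.Split(strings.Trim(path, "/"), "/")
	if len(parts) != 3 || parts[0] != "session" || parts[1] == "" || parts[2] == "" {
		return "", false
	}
	return parts[1], true
}

// newRouter proxies each session request to the worker chosen by pick.
// A real router would likely cache proxies instead of building one per request.
func newRouter(pick func(sessionID string) (*url.URL, bool)) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		id, ok := sessionIDFromPath(r.URL.Path)
		if !ok {
			http.NotFound(w, r)
			return
		}
		target, ok := pick(id)
		if !ok {
			http.Error(w, "no session workers available", http.StatusServiceUnavailable)
			return
		}
		httputil.NewSingleHostReverseProxy(target).ServeHTTP(w, r)
	})
}

func main() {
	// A stand-in session worker that reports the path it received.
	worker := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintf(w, "worker saw %s", r.URL.Path)
	}))
	defer worker.Close()

	target, err := url.Parse(worker.URL)
	if err != nil {
		panic(err)
	}
	router := newRouter(func(string) (*url.URL, bool) { return target, true })

	rec := httptest.NewRecorder()
	router.ServeHTTP(rec, httptest.NewRequest("GET", "/session/abc123/u1", nil))
	fmt.Println(rec.Code, rec.Body.String())
}
```

Putting the ID in the path rather than inside the token means the router never needs to decrypt anything to make its routing decision.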

APIs and tokens

All told, the API only really had a few unique endpoints, which looked like this.

/api/v1/new-game/join-game

/session/<game-id>/<user-id>

The new-game endpoint would generate a game-id, and a user-id for each player. Each player would then call join-game to generate an auth token before connecting to the session. The auth token essentially consisted of the game-id, the user-id, and a timestamp, all wrapped in an AES-GCM envelope. The token was then passed over the websocket in order to authenticate to the session worker.

This extra step was added because the auth token itself is too long to include in a nicely shareable URL. In retrospect, it isn’t necessary because the user-id is essentially treated like a credential anyway in this design. I think I had some plans to eventually prevent reuse, but it ended up not being worthwhile. It’s not like there’s any serious consequence here anyway.

Future

I might still re-launch multiplayer someday. I have some ideas to reduce the infrastructure cost to near-zero. Its original launch was quite over-engineered: everything was deployed by CloudFormation and supported autoscaling.

I haven’t load-tested it, but when I do I expect to find that a single process can serve hundreds, if not thousands, of simultaneous sessions. At least I know that it’ll scale when I need it to.