How not to build a gaming PC

Over the past month and a half, I’ve been battling the various ghosts and demons that have apparently taken up residence in the ancient server chassis that I am now using as a backend for a Steam Link. Since I doubt anyone else on the Internet is insane enough to do this, I suppose I am mostly making this post for myself to refer back to when it inevitably breaks. If, by chance, you do find this useful, then that is merely a coincidence.

Prologue

My main PC runs Ubuntu, and I have been maining Linux for over a decade now. Most of the games I play have native Linux support or at least work well in Proton. Sometimes, however, a game is more suited to sitting on the couch with a controller than sitting at my desk with a keyboard and mouse. Even in those cases, streaming to a Steam Link attached to my TV works well.

Fall Guys was one such couch game, and unfortunately it also ended my run of smooth sailing with my gaming experience on Linux. With the introduction of an anticheat in late 2020, it would no longer work under Proton. To remedy this situation, I installed Windows on another SSD and I found myself dual booting for the first time in at least eight years.

Now, to play Fall Guys, I just had to get up off the couch, walk to my office, reboot into Windows, log in, then connect to it from the Steam Link.

This was clearly too much work, so it was obviously time to exert even more effort doing something stupid to solve it.

Chapter 1: I buy some hardware

Should the reader of the distant future not recall, it was impossible to buy a GPU from 2020 until at least 2022. New cards were going out of stock instantly, only to be slung on eBay for double the MSRP. Even old cards, which might have gone for $100 used a few years ago, now cost hundreds more. Anyway, that’s how I ended up with a Dell OEM Radeon RX 580, a card that is now approaching five years in age (I do not want to discuss how much I paid for it).

What will I be doing with this hunk of old silicon somehow worth its weight in gold, you ask? Why, shoving it in an even older server, of course.

I acquired an HP ProLiant DL380p Gen 8 chassis (for far less than I paid for the GPU, I might add) and attempted to stick the card in it.

Chapter 2: This is the part of the story where I start to dislike HP

The PCI risers that came with the chassis would not accommodate the card. Each (one per socket) held an x16 and two x8 PCIe slots. The x16 was at the top, and thus my two-slot card could not physically be installed.

A few other varieties of risers were available for purchase. One has two x16 slots, and it turns out that is the one I needed. Upon acquiring it (after paying several times the value of the three-slot riser I already had), I discovered that it can only be installed on the second socket. Yes, on the first socket, the second x16 slot of this riser would land on a connector that HP, for some reason, decided would only be x8. Whatever, this isn’t the end of the world. It’s just slightly ridiculous that my gaming VM will have to access resources on both NUMA nodes (since of course the network and SCSI adapters reside on the first socket).

Chapter 3: Did I mention the virtualization?

Ah, yes – virtualization. I put Proxmox VE on the iron, a relatively smooth install with ZFS for root. It is only somewhat annoying that my /boot partition lies on a flash drive now. Did I mention this chassis doesn’t support UEFI?

I configured the Windows VM with ovmf firmware, the pc-q35 machine type, the host CPU type, a Virtio SCSI controller, and everything else the Internet told me to do to get the best performance. I did the install and configured the basic necessities, then it became time to attach the GPU.

To pass the GPU through to the VM, I jumped through several hoops, only some of which were well-documented; some, I found, were unique to the experience of dealing with, well, whatever HP did to this server.

Proxmox provides pretty good documentation on setting up PCI passthrough, so it was fairly easy to figure out that I needed to add intel_iommu=on iommu=pt vfio-pci.ids=blah,blah to my kernel command line. I neglected to read the part about blacklisting the graphics drivers, so it took a few more reboots (with very, very long POSTs) before I remembered to do that and got the vfio module to actually handle the card.
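A minimal sketch of how one might sanity-check this setup after a reboot, rather than finding out when the VM refuses to start. The helper names are made up for illustration, and the PCI address in the usage comment is a placeholder, not the address of the card in this server.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical.

# Succeeds if the given kernel command line contains both IOMMU parameters.
has_iommu_params() {
    case " $1 " in
        *" intel_iommu=on "*) ;;
        *) return 1 ;;
    esac
    case " $1 " in
        *" iommu=pt "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Prints the kernel driver bound to a PCI device, or "none" if unbound.
# $1 = PCI address, $2 = sysfs root (defaults to /sys).
bound_driver() {
    link="${2:-/sys}/bus/pci/devices/$1/driver"
    if [ -L "$link" ]; then
        basename "$(readlink "$link")"
    else
        echo "none"
    fi
}

# Usage on the host (the address is a placeholder):
#   has_iommu_params "$(cat /proc/cmdline)" && echo "IOMMU parameters present"
#   bound_driver 0000:0a:00.0   # should print vfio-pci once the blacklist works
```

If `bound_driver` prints a graphics driver name instead of vfio-pci, the blacklist step is probably the part that got skipped.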

I attempted to attach the card to the VM, and it failed to start with Operation not permitted. Several hours of Googling led me to this post, which is terrifyingly recent and makes me think I would not have been able to accomplish this feat if I had attempted it only a year earlier, despite the age of the hardware.

The page he linked to explained some problem I didn’t understand, but sure sounded like what was happening to me. Thus, I spent quite some time struggling to install these HP utilities and after eventual success, started blindly typing commands to flip bits in the BIOS.

Why would HP not make these things configurable in the regular BIOS ROM? Why would I have to acquire a separate XML file that tells it which addresses to poke bits in? *shakes fist at HP*

These mysterious, and frankly frightening, incantations fortunately allowed the VM to finally boot.

Chapter 4: Performance anxiety

After getting the GPU drivers and everything set up, most of the games I was interested in playing (themselves about as old as the server I was running them on) Just Worked – but only half the time. The other half, the VM would boot in a state where everything was unbearably slow.

As previously alluded to, the GPU was bound to NUMA1, so the “half the time” it was slow is when the VM landed on NUMA0. I Googled for some incantations to pin the VM to a node, and typed hookscript: local:snippets/pin-numa1.sh into /etc/pve/qemu-server/${vm}.conf.

The hookscript itself, living at /var/lib/vz/snippets/pin-numa1.sh, was the following kludge:

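    #!/bin/bash
    # Proxmox calls a hookscript with the VM ID as $1 and the phase as $2.
    if [[ "$2" == "post-start" ]]; then
        # PID of the QEMU process backing this VM.
        pid="$(< "/run/qemu-server/${1}.pid")"
        # Host CPUs to pin to (the ones on NUMA1 in this machine).
        cpus="8-15,24-31"
        # Pin every thread of the QEMU process to those CPUs.
        taskset --cpu-list --all-tasks --pid "$cpus" "$pid"
    fi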
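The hard-coded CPU list in that hookscript could, in principle, be looked up from sysfs instead. The following is only a sketch: the helper names and the PCI address are hypothetical, and it assumes the kernel exposes the usual numa_node and cpulist files for the device and node.

```shell
#!/bin/sh
# Sketch only: helper names and the PCI address below are hypothetical.

# Prints the NUMA node a PCI device is attached to
# (-1 if the kernel does not know).
# $1 = PCI address, $2 = sysfs root (defaults to /sys).
pci_numa_node() {
    cat "${2:-/sys}/bus/pci/devices/$1/numa_node"
}

# Prints the host CPU list of a NUMA node, e.g. "8-15,24-31".
# $1 = node number, $2 = sysfs root (defaults to /sys).
node_cpulist() {
    cat "${2:-/sys}/devices/system/node/node$1/cpulist"
}

# Usage inside the hookscript (the address is a placeholder):
#   node="$(pci_numa_node 0000:0a:00.0)"
#   cpus="$(node_cpulist "$node")"
```

This keeps the script honest if the card ever moves to a different riser, at the cost of one more thing that can silently return -1.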

I found this actually made the problem worse, because for some reason my system now preferred assigning the VM memory that belonged to NUMA0 rather than NUMA1. After further Googling, I obtained the following spell to put in the VM config:

numa0: cpus=0-15,hostnodes=1,memory=12288,policy=bind

Now, quite entertainingly, the VM would fail to start because for some reason, other stuff was now consuming too much of NUMA1’s memory to be able to make this reservation.
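A rough way to see this failure coming is to look at how much memory a node has free before starting the VM. This is a sketch with made-up helper names; it reads the per-node meminfo file in sysfs, and MemFree ignores memory the kernel could reclaim from caches, so treat the result as a pessimistic estimate rather than a verdict.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical.

# Prints the free memory of a NUMA node in MiB.
# $1 = node number, $2 = sysfs root (defaults to /sys).
node_free_mib() {
    # Lines look like: "Node 1 MemFree:  20971520 kB"
    awk '$3 == "MemFree:" { print int($4 / 1024) }' \
        "${2:-/sys}/devices/system/node/node$1/meminfo"
}

# Succeeds if the node has at least the requested amount free.
# $1 = node number, $2 = required MiB, $3 = sysfs root (defaults to /sys).
node_can_fit() {
    [ "$(node_free_mib "$1" "$3")" -ge "$2" ]
}

# Usage before starting the VM (12288 matches the memory= value above):
#   node_can_fit 1 12288 || echo "NUMA1 is too full for a bind reservation"
```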

Fortunately, this machine is old enough to be DDR3 and thus I was able to obtain enough RAM (with DIMMs identical to those I was already running) to expand to 64 GB per socket for, well, less than I paid for anything else that went into this box. This had the added benefit of using all four of the channels available to each socket, instead of just one as was the case before.

Finally, I was able to boot the VM and confirm with numastat that the VM was running on NUMA1, and its memory was local. Games were running smoothly… most of them, at least.

Chapter 5: Two reasons this post is a month late

There was still something wrong.

Dolphin emulator was running like utter garbage. GameCube games were almost passable, averaging about 25 FPS with occasional lag spikes. Wii games were entirely unplayable. I looked at performance metrics – nothing would indicate that this was too much for the box to handle. I ran again to Google. I found this post which suggested it was a CPU scaling issue. I looked again at the numbers. Indeed, my CPUs were not boosting above their most economical clock. But why? The performance governor was in place, but it did nothing.

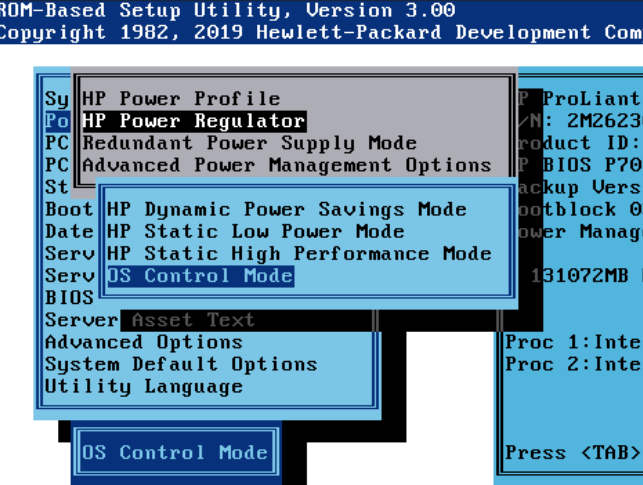

Hours of fruitless debugging later, I recalled something I saw in the BIOS. Yes, the actual BIOS, not the feed-me-some-XML-so-I-can-twiddle-bits commandline utility…

This option was set to “High Performance Mode,” which is code for “fast when HP’s heuristic thinks you’re doing something hard.” Setting it to “OS Control Mode” allowed the governor to work as expected. The emulator now worked (after switching it to DX11 mode – for some reason OpenGL still ran poorly).
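For next time, a sketch of how one might spot the "governor says performance, clocks say otherwise" situation from the host. The function name is made up, and it assumes the standard cpufreq files are present in sysfs, which may not be the case on every firmware setting.

```shell
#!/bin/sh
# Sketch only: the function name is hypothetical.

# Prints "cpuN governor current_khz max_khz" for every CPU exposing cpufreq.
# $1 = sysfs root (defaults to /sys).
cpufreq_report() {
    for dir in "${1:-/sys}"/devices/system/cpu/cpu[0-9]*/cpufreq; do
        # Skip CPUs (or an unmatched glob) without cpufreq information.
        [ -r "$dir/scaling_governor" ] || continue
        cpu="$(basename "$(dirname "$dir")")"
        echo "$cpu $(cat "$dir/scaling_governor") $(cat "$dir/scaling_cur_freq") $(cat "$dir/cpuinfo_max_freq")"
    done
}

# Usage while the guest is under load:
#   cpufreq_report | sort -V
```

If the current frequency sits far below the maximum on every core while a game is running, the governor is probably not the one in charge.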

One problem remained.

Fall Guys, which inspired this whole adventure, would spontaneously crash. Not just crash, but cause the entire VM to reset. Fortunately, the iron survived these events, and its dmesg indicated that the graphics card generated an NMI. Don’t worry, I don’t know what that means either, besides that it’s bad. Bad enough to also show up in iLO logs with a big red icon next to it.

I could have simply ignored this issue and played all the other games in the meantime instead. But no, I can’t give up now, I’ve done too much to quit so soon.

I don’t even know what I typed into Google to find it, but this kernel patch gave me a vague sense of hope.

I typed echo '1' > /sys/module/kvm/parameters/ignore_msrs into the iron and booted the VM back up. I launched Fall Guys. I qualified from the first round. No crash. Surely, I will crash out in the next round… Yet, I did not. I played all the way through to the final, where I didn’t stand a chance for the crown.

However, what I had obtained instead was far more valuable: validation. I added this parameter to GRUB.
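A small sketch for checking, after the next reboot, that the setting actually stuck. The function name is made up. The exact GRUB line is not recorded here; the usual kernel command line spelling for a module parameter like this would be kvm.ignore_msrs=1, which is an assumption about what went into GRUB rather than a quote from it.

```shell
#!/bin/sh
# Sketch only: the function name is hypothetical.

# Succeeds if KVM is currently ignoring unhandled MSR accesses.
# The kernel reports boolean module parameters as Y or N.
# $1 = sysfs root (defaults to /sys).
kvm_ignores_msrs() {
    value="$(cat "${1:-/sys}/module/kvm/parameters/ignore_msrs")"
    [ "$value" = "Y" ] || [ "$value" = "1" ]
}

# Usage after a reboot:
#   kvm_ignores_msrs || echo "ignore_msrs is off; check the kernel command line"
```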

Epilogue

About a year ago, I first had the thought that “it would be neat to have a gaming server for the Steam Link to connect to.” Had I known how arduous this journey would be, I likely wouldn’t have embarked. Was it worth it? Probably not. But maybe the real gaming server is the arcane knowledge we picked up along the way.